Machine Learning for Natural Language Processing

Natural Language Processing (NLP) uses a lot of machine learning techniques in various tasks nowadays. In our team, we contribute to this field according to three main directions. First, we work on the development of new learning models for language learning taking into account contextual and semantic information to facilitate and improve learning. Second, we focus on methods for learning new Word Embeddings (word representations) that can be used by NLP methods. Third we investigate Deep Learning techniques for Text Summarization.

Machine Learning provides a lot of methods for dealing with structured data or text that form the basis of many Natural Language Processing techniques. In particular, we work on three main axis: (i) the problem of learning languages with the help of additional information; (ii) the improvement of word representations by learning efficient Word Embeddings; (iii) the design of Deep Learning techniques for Text Summarization.

-Language Learning with semantic and contextual information. While classic methods for language learning in grammatical inference are based on syntactic information, we rather design a system that is able to learn language models taking into account the perceptual context in which the sentences of the model are produced. The system we develop learns from pairs (Context, Sentence) where: Context is given in the form of an image whose objects have been identified, and Sentence gives a (partial) description of the image. Our approach is based on Inductive Logic Programming techniques in order to use a subset of first order logic as a common framework for knowledge representation and reasoning.

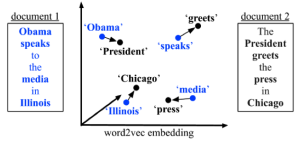

-Learning efficient Word Embeddings from structured text. Many Natural Language Processing (NLP) methods require the words or sentences to be changed into numerical vectors of real numbers. We propose to contribute to this topic by studying how to take into account external information to improve the embeddings with a special focus on deep learning methods. We also aim at developing new embeddings with binary representations to accelerate the computation efficiency in the context of NLP tasks. Another direction is to derive theoretical justifications of our methods. This research is made in collaboration with the Connected Intelligence team.

-Learning text summarizers. We work on new abstractive text summarization techniques in the context of meetings. We want to automate the process of generating minutes of meetings at the end of those meetings. Given some datasets made up of speech-to-text transcriptions of meetings and their manually generated minutes we investigate deep learning techniques to learn abstractive text summarizers from those datasets. This is a collaborative project with the GREYC lab in Caen and industrial partners: Viseo, Ho2S, Pulse Origin, GEM and Co-Work in Grenoble; Vocapia in Orsay.