Transfer Learning and Domain Adaptation

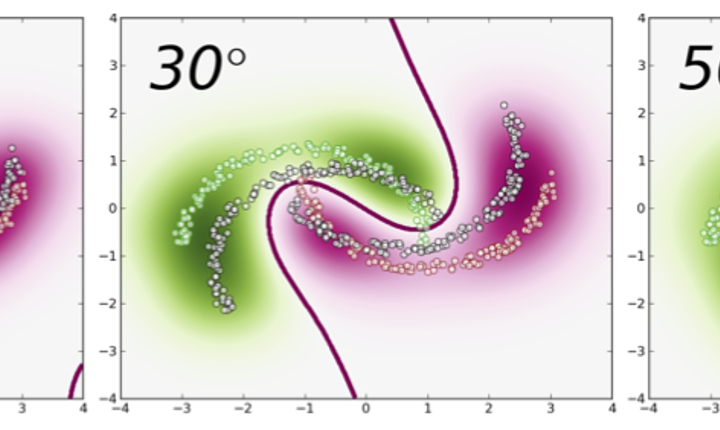

Transfer learning, or domain adaptation, is a research topic that addresses the problem of transferring knowledge or models from a given source task to a related but different target task. The Data Intelligence team works on several subproblems, including avoiding negative transfer, scalability, lifelong learning, and adaptive online learning.

More precisely, the goal is to automatically transfer models (for classification or regression) from a given source task to a new target task that is related but different.

In this context, we try to address the following issues:

- How to avoid negative transfer? Negative transfer occurs when the model diverges even though it is learned with the standard procedure, which jointly minimizes the source error and a divergence between the source and target distributions. To avoid it, we investigate the design of divergence measures specific to automatic transfer and the definition of formal frameworks of transferability.

- How to deal with scalability? We address this issue along two main directions: (i) algorithmics, with the design of online methods, the use of new and efficient norms that induce sparse models, and the inference and combination of weak transfer rules; (ii) the design of theoretical frameworks, with the development of a learning theory from graphs of machines where the transfer problem is highly distributed.

- How to tackle the problem of lifelong learning? Lifelong learning can be seen as a generalization of transfer learning in which the knowledge acquired from past learning tasks helps to adapt the current model(s) to a new incoming task.
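
The standard procedure mentioned in the first item, jointly minimizing the source error and a divergence between the source and target distributions, can be sketched for a linear model as follows. This is only an illustration under stated assumptions: no particular divergence is specified here, so the squared gap between mean predictions on source and target is used as a simple stand-in, and the names `source_error`, `mean_divergence`, `joint_objective` and the trade-off weight `lam` are hypothetical.

```python
from statistics import fmean


def predict(w, x):
    """Linear prediction: dot product of weights w and features x."""
    return sum(wi * xi for wi, xi in zip(w, x))


def source_error(w, Xs, ys):
    """Mean squared error of the model on the labeled source sample."""
    return fmean((predict(w, x) - y) ** 2 for x, y in zip(Xs, ys))


def mean_divergence(w, Xs, Xt):
    """Assumed stand-in divergence: squared gap between the mean
    prediction on the source sample and on the unlabeled target sample."""
    gap = fmean(predict(w, x) for x in Xs) - fmean(predict(w, x) for x in Xt)
    return gap ** 2


def joint_objective(w, Xs, ys, Xt, lam=1.0):
    """Source error plus lam times the source/target divergence."""
    return source_error(w, Xs, ys) + lam * mean_divergence(w, Xs, Xt)


# Toy data: the target sample is the source sample shifted along feature 0.
w = [1.0, -2.0]
Xs = [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0], [2.0, 1.0]]
ys = [predict(w, x) for x in Xs]          # labels fit exactly by w
Xt = [[x[0] + 2.0, x[1]] for x in Xs]     # shifted target inputs

no_shift = joint_objective(w, Xs, ys, Xs)   # identical samples
with_shift = joint_objective(w, Xs, ys, Xt)  # shifted target
```

With identical source and target samples the divergence term vanishes and the objective reduces to the source error. With a shifted target, the penalty grows with `lam`, which is the trade-off that a learner minimizing this objective has to balance.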
