Article: Multitemporal Speckle Reduction With Self-Supervised Deep Neural Networks

In January 2023, our Image Science & Computer Vision team published a paper in IEEE Transactions on Geoscience and Remote Sensing (TGRS). The journal publishes technical papers disclosing new and significant research related to the theory, concepts, and techniques of science and engineering as applied to sensing the land, oceans, atmosphere, and space.

Several satellites now continuously produce Synthetic Aperture Radar (SAR) images of the Earth. These images provide crucial information for monitoring forests, oceans, glaciers, and urban areas all over the world. While optical satellites cannot observe the ground in the presence of clouds, SAR satellites see through them and are thus very useful for detecting and analyzing changes. Yet a fundamental difficulty of SAR imaging comes from the phenomenon of speckle, which strongly degrades the quality of the images.

This paper extends our recent self-supervised training strategy "MERLIN", based on the decomposition of complex-valued SAR images into pairs of real-valued images with statistically independent speckle, to multi-temporal filtering. We developed a statistical model of sequences of SAR images and showed that deep neural networks can learn to suppress speckle using only noisy data. Compared to single-image speckle reduction, multi-temporal speckle filtering better preserves small structures such as roads or the boundaries between fields, without introducing noticeable artifacts when changes occur in the time series. This work results from a collaboration with the LTCI at Telecom Paris and the MAP5 at Université Paris Cité.
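To make the decomposition mentioned above more concrete, here is a minimal Python sketch. It assumes the classical fully developed speckle model, in which the real and imaginary parts of a single-look complex pixel are independent zero-mean Gaussians, each with variance equal to half the reflectivity. The function name `simulate_slc`, the constant scene, and the image size are hypothetical choices for illustration. The sketch also ignores the spatial correlations introduced by a real sensor, which the paper has to account for.

```python
import numpy as np

def simulate_slc(reflectivity, rng):
    """Draw a single-look complex image under a fully developed speckle model.

    Assumption of this sketch: real and imaginary parts are independent
    zero-mean Gaussians, each with variance reflectivity / 2.
    """
    sigma = np.sqrt(reflectivity / 2.0)
    real = rng.normal(0.0, 1.0, reflectivity.shape) * sigma
    imag = rng.normal(0.0, 1.0, reflectivity.shape) * sigma
    return real + 1j * imag

rng = np.random.default_rng(0)
reflectivity = np.full((256, 256), 4.0)  # hypothetical constant scene
z = simulate_slc(reflectivity, rng)

# The two real-valued "halves" of the complex image.
a, b = z.real, z.imag

# Speckled intensity; its expectation equals the reflectivity.
intensity = a**2 + b**2

# Empirical correlation between the two halves (close to zero here).
corr = np.corrcoef(a.ravel(), b.ravel())[0, 1]
print(f"mean intensity: {intensity.mean():.2f}")
print(f"corr(real, imag): {corr:.3f}")
```

Under these assumptions, each half carries information about the same underlying reflectivity, but the speckle in one half cannot be predicted from the other. That is what makes it possible to use one half as input and the other as a training target.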

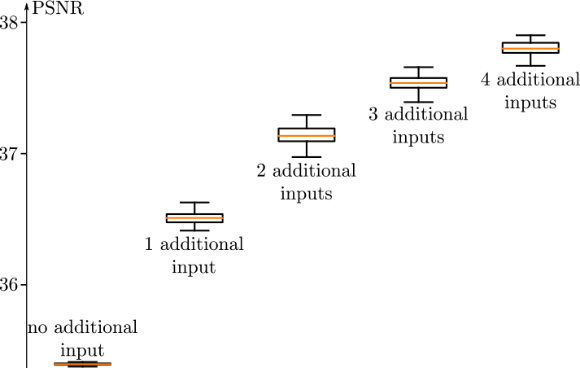

Figure 1 above: When additional images are provided to the neural network, the quality of its prediction improves, as measured on these numerical simulations by the PSNR criterion (the higher the better).
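For readers unfamiliar with the PSNR criterion, a minimal sketch of how it is commonly computed follows. The choice of peak value (here the maximum of the reference image by default) and the domain in which images are compared are assumptions of this sketch; the paper may use a different convention.

```python
import numpy as np

def psnr(reference, estimate, peak=None):
    """Peak signal-to-noise ratio in decibels (higher is better).

    `peak` defaults to the maximum of the reference image, which is an
    assumption of this sketch rather than the paper's stated convention.
    """
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    if peak is None:
        peak = reference.max()
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak**2 / mse))

# Hypothetical example: a flat reference and two estimates of differing quality.
reference = np.full((4, 4), 10.0)
close_estimate = reference + 1.0   # mean squared error of 1
far_estimate = reference + 2.0     # mean squared error of 4

print(psnr(reference, close_estimate))
print(psnr(reference, far_estimate))
```

The closer estimate yields the higher PSNR, which matches the "higher is better" reading of the figure.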

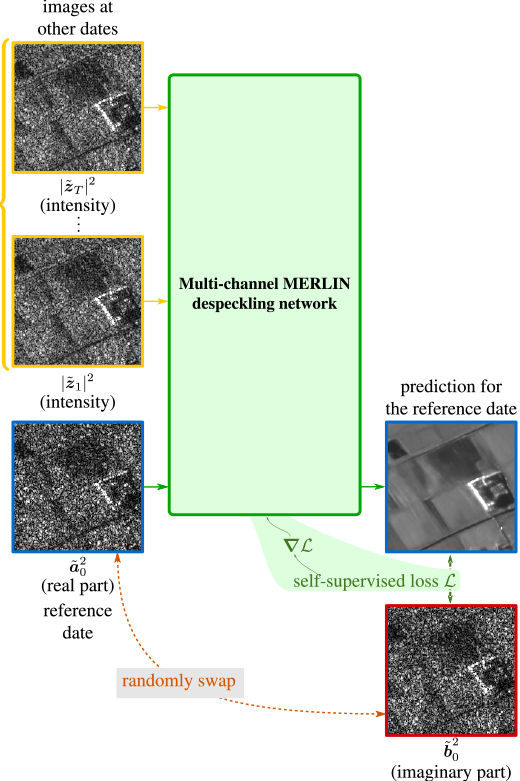

Figure 2 above: Our neural network (green box) takes several images as input (yellow) in addition to half of the information of the reference image (blue). It produces a restored image (blue image on the right) that can be compared to the other half of the reference image (red). This guides the training of the network.
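The comparison between the restored image and the held-out half can be sketched as a negative log-likelihood. The sketch below assumes that the held-out half is a zero-mean Gaussian whose variance is half the reflectivity, which leads to a per-pixel term of the form ½·log(R) + b²/R up to a constant. The function name `merlin_style_loss` is hypothetical, and the network itself is left out: a constant prediction stands in for its output.

```python
import numpy as np

def merlin_style_loss(predicted_reflectivity, held_out_part, eps=1e-8):
    """Negative log-likelihood of the held-out half given a predicted reflectivity.

    Assumes held_out_part ~ N(0, R / 2) per pixel (an assumption of this
    sketch), so that -log p = 0.5 * log(R) + b**2 / R + constant.
    """
    r = np.maximum(np.asarray(predicted_reflectivity, dtype=np.float64), eps)
    b = np.asarray(held_out_part, dtype=np.float64)
    return float(np.mean(0.5 * np.log(r) + b**2 / r))

# Hypothetical check: the loss should be lowest near the true reflectivity,
# even though only noisy samples are ever observed.
rng = np.random.default_rng(1)
true_r = 4.0
b = rng.normal(0.0, np.sqrt(true_r / 2.0), size=100_000)

candidates = np.linspace(1.0, 8.0, 71)
losses = [merlin_style_loss(np.full_like(b, r), b) for r in candidates]
best = candidates[int(np.argmin(losses))]
print(f"reflectivity minimizing the loss: {best:.1f}")
```

In this toy setting, the loss is minimized close to the true reflectivity without any speckle-free image being available, which illustrates why the held-out half can guide training.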

Abstract

Speckle filtering is generally a prerequisite to the analysis of synthetic aperture radar (SAR) images. Tremendous progress has been achieved in the domain of single-image despeckling. Latest techniques rely on deep neural networks to restore the various structures and textures peculiar to SAR images. The availability of time series of SAR images offers the possibility of improving speckle filtering by combining different speckle realizations over the same area. The supervised training of deep neural networks requires ground-truth speckle-free images. Such images can only be obtained indirectly through some form of averaging, by spatial or temporal integration, and are imperfect. Given the potential of very high quality restoration reachable by multi-temporal speckle filtering, the limitations of ground-truth images need to be circumvented. We extend a recent self-supervised training strategy for single-look complex SAR images, called MERLIN, to the case of multi-temporal filtering. This requires modeling the sources of statistical dependencies in the spatial and temporal dimensions as well as between the real and imaginary components of the complex amplitudes. Quantitative analysis on datasets with simulated speckle indicates a clear improvement of speckle reduction when additional SAR images are included. Our method is then applied to stacks of TerraSAR-X images and shown to outperform competing multitemporal speckle filtering approaches. The code of the trained models and supplementary results are made freely available here.
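To illustrate why combining several speckle realizations of the same area helps, here is a minimal sketch of plain temporal averaging. It is a simple baseline, not the method of the paper. It assumes single-look intensities that follow an exponential distribution, a scene that does not change over time, and speckle that is uncorrelated between dates. Real time series violate the last two assumptions, which is part of what the paper models. The helper names are hypothetical.

```python
import numpy as np

def temporal_multilook(intensity_stack):
    """Average a stack of co-registered intensity images along the time axis."""
    return np.mean(intensity_stack, axis=0)

def equivalent_number_of_looks(intensity):
    """Estimate the equivalent number of looks on a homogeneous area.

    Uses the usual mean**2 / variance estimator; larger means less speckle.
    """
    return float(intensity.mean() ** 2 / intensity.var())

rng = np.random.default_rng(2)
reflectivity = 4.0   # hypothetical constant scene
num_dates = 16       # hypothetical length of the time series

# Single-look intensities: exponentially distributed around the reflectivity.
stack = rng.exponential(scale=reflectivity, size=(num_dates, 256, 256))

single = equivalent_number_of_looks(stack[0])
averaged = equivalent_number_of_looks(temporal_multilook(stack))
print(f"ENL of one date: {single:.2f}")
print(f"ENL after averaging {num_dates} dates: {averaged:.2f}")
```

Under these idealized assumptions, the equivalent number of looks grows roughly in proportion to the number of dates. With a finite number of dates some speckle always remains, which is consistent with the abstract's remark that averaged images are imperfect as ground truth.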

Read the full article here