String representations in deep networks

Convolutional Neural Network (CNN) features learned from ImageNet were shown to be generic and very efficient in various computer vision tasks as image classification or object recognition. However they lack flexibility to take into account variations in the spatial layout of visual elements:

- They use a fixed input image size.

- The coding of spatial layout is fixed and learned on the training dataset.

- At classification step a rigid matching is performed.

We propose to represent images as as strings of pretrained CNN features. Pretrained features are extracted from the last convolution layer of the CNN and they are pooled across spatial bins to get vertical strings. Similarities between two images are then computed using new edit distance variants adapted to flexible image comparison. For example, the merge-based edit distance allows to merge similar subsequent symbols in the string.

This representation can efficiently be used for image classification by plugging the edit distance into an SVM kernel and by training a model on the target dataset. This method outperforms state of the arts approaches where the CNN is fined tunned on the target dataset. Advantages are:

- The use of a pre-trained CNN.

- A single pass in feature extraction step with variable input image size.

- Can be achieved quickly in a standard CPU.

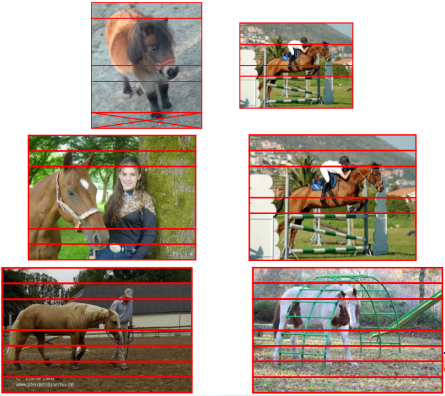

The following figure illustrates the flexibility and relevance of image alignment provided by merge-based edit distance.

Examples of image alignments obtained by the merge-based edit distance on images extracted from Pascal VOC 2007 dataset.

C. Barat and C.Ducottet, String representations and distances in deep convolutional neural networks for image classification, Pattern Recognition, vol 54, pp 104-115, June 2016.